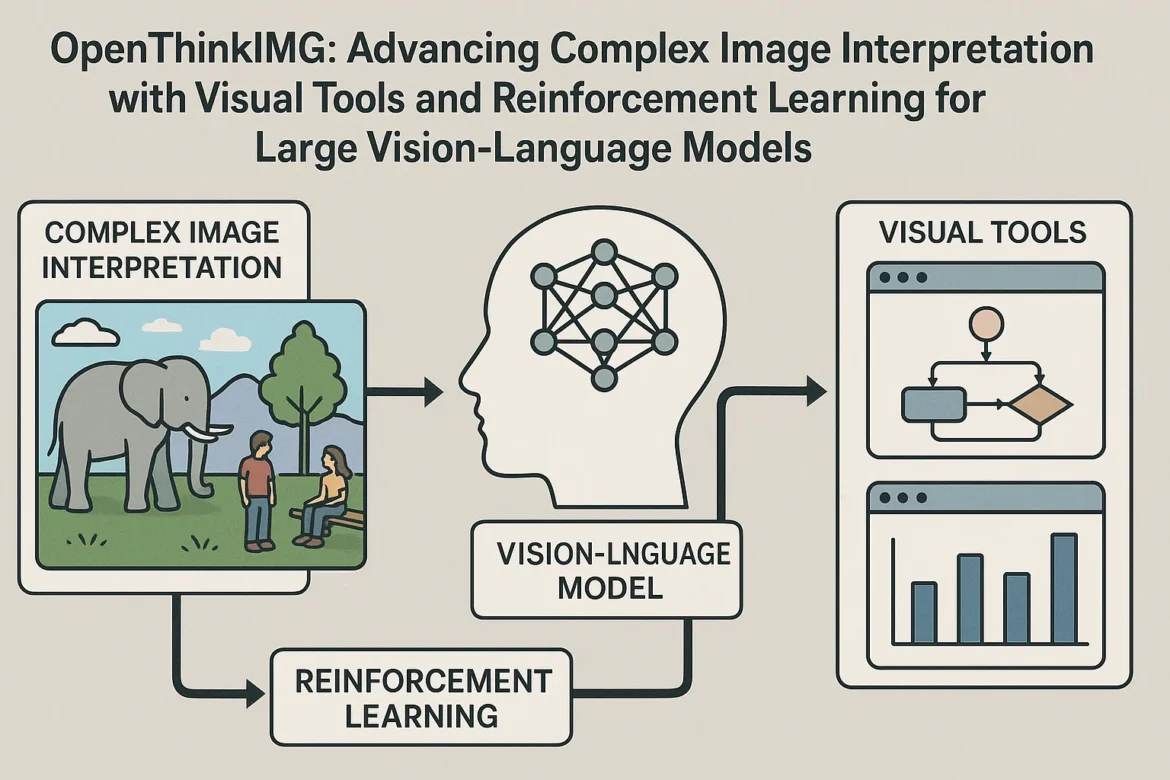

As industries from healthcare to finance demand deeper insights from visual data, the ability to interpret complex images with precision and adaptability matters more than ever. Conventional models often fall short on tasks that require iterative reasoning, dynamic interaction, and context-aware analysis. Enter OpenThinkIMG, an open-source framework that enables Large Vision-Language Models (LVLMs) to “think with images” by combining visual tools with reinforcement learning. This article explores how OpenThinkIMG approaches complex image interpretation, why its integration of tool-based reasoning and reinforcement learning sets it apart, and how long-tail keywords and SEO strategies can help this technology reach the audiences who need it most.

The Need for Advanced Visual Reasoning in Artificial Intelligence

Demand for complex image interpretation has surged as organizations seek to automate work that previously required expert human analysis. Standard LVLMs can describe images or answer basic questions, but they often struggle with tasks that require multiple steps, such as extracting precise data from scientific charts, highlighting anomalies in medical scans, or understanding intricate relationships in industrial diagrams. These difficulties stem from the models’ reliance on passive observation and static, pre-labelled datasets: the model looks at the image once and answers, with no way to probe it further, which leaves it unprepared for the dynamic, interactive reasoning that real-world scenarios require.

OpenThinkIMG addresses these limitations by introducing a new paradigm: instead of merely “seeing” images, AI models can now interact with them, using modular visual tools to sketch, highlight, extract, and manipulate image regions. This active engagement, combined with reinforcement learning, enables LVLMs to refine their understanding iteratively, adapt to novel tasks, and generalize learned strategies across domains. The result is a significant leap in performance, particularly on tasks that demand nuanced, multi-step reasoning and precise tool use.
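To make “interacting with an image” concrete, the loop below sketches one plausible shape for it: at each step the model either requests a tool call or gives a final answer, and every tool result is appended to the history the model sees next. This is a minimal illustration under stated assumptions, not OpenThinkIMG’s actual API. `Action`, `run_episode`, `read_region`, and the dictionary standing in for an image are all hypothetical, and a real system would replace `scripted_policy` with an LVLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class Action:
    """Hypothetical model output: either a tool call or a final answer."""
    tool: Optional[str] = None
    args: Dict[str, object] = field(default_factory=dict)
    answer: Optional[str] = None

History = List[Tuple[str, object]]

def run_episode(policy: Callable[[History], Action],
                tools: Dict[str, Callable[..., object]],
                question: str,
                max_steps: int = 5) -> Tuple[Optional[str], History]:
    """Alternate model decisions and tool observations until the model answers."""
    history: History = [("question", question)]
    for _ in range(max_steps):
        action = policy(history)
        if action.answer is not None:          # the model is done reasoning
            return action.answer, history
        observation = tools[action.tool](**action.args)
        history.append((action.tool, observation))  # feed the result back to the model
    return None, history                       # step budget exhausted without an answer

# --- Toy usage: a dict stands in for an image, a script stands in for the LVLM ---
IMAGE = {"title": "Quarterly revenue", "bar_q3": "42"}

def read_region(region: str) -> str:
    # Hypothetical OCR-style tool: return the text found in a named region.
    return IMAGE[region]

def scripted_policy(history: History) -> Action:
    # Inspect one region first, then answer with what the tool returned.
    if len(history) == 1:
        return Action(tool="read_region", args={"region": "bar_q3"})
    return Action(answer=str(history[-1][1]))

answer, trace = run_episode(scripted_policy, {"read_region": read_region},
                            "What is the Q3 revenue?")
print(answer)  # 42
```

The step budget (`max_steps`) matters in practice: without it, a policy that keeps calling tools would never terminate.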

OpenThinkIMG’s Architecture: Modular Visual Tools Meet Reinforcement Learning

At the core of OpenThinkIMG is a flexible architecture that combines a robust visual tool management system with a novel reinforcement learning framework called V-TOOLRL. The visual tool ecosystem includes over fifty pre-built modules, such as region-of-interest analyzers, chart data extractors, semantic segmenters, and text extractors, and each one exposes a standardized interface for easy integration and extension. These tools operate as independent microservices, which allows distributed deployment, parallel processing, and fault tolerance. This modularity lets OpenThinkIMG scale to demanding image interpretation tasks, from high-resolution medical imaging to real-time industrial monitoring.
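A standardized interface is what makes a plug-in tool ecosystem workable. The sketch below assumes every tool takes a dictionary of arguments and returns a value, registers tools by name, runs independent calls in parallel, and turns a failing tool into an error result instead of a crash. It runs in a single process with a thread pool, so it only approximates a microservice deployment; `ToolRegistry`, `ToolResult`, and the two toy tools are hypothetical and not taken from OpenThinkIMG’s codebase.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

@dataclass
class ToolResult:
    tool: str
    ok: bool
    output: Any = None
    error: Optional[str] = None

ToolFn = Callable[[Dict[str, Any]], Any]

class ToolRegistry:
    """Hypothetical registry: every tool shares one signature (dict of args in, value out)."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolFn] = {}

    def register(self, name: str, fn: ToolFn) -> None:
        if name in self._tools:
            raise ValueError(f"tool {name!r} is already registered")
        self._tools[name] = fn

    def call(self, name: str, args: Dict[str, Any]) -> ToolResult:
        # Fault isolation: an unknown or failing tool becomes an error result, not a crash.
        try:
            return ToolResult(name, True, output=self._tools[name](args))
        except Exception as exc:
            return ToolResult(name, False, error=f"{type(exc).__name__}: {exc}")

    def call_many(self, requests: List[Tuple[str, Dict[str, Any]]],
                  max_workers: int = 4) -> List[ToolResult]:
        # Independent calls run concurrently; pool.map preserves request order.
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            return list(pool.map(lambda req: self.call(*req), requests))

# --- Toy usage with a 3x3 grayscale "image" stored as nested lists ---
registry = ToolRegistry()
registry.register("crop", lambda a: [row[a["x0"]:a["x1"]] for row in a["image"][a["y0"]:a["y1"]]])
registry.register("mean_intensity",
                  lambda a: sum(map(sum, a["image"])) / sum(len(r) for r in a["image"]))

img = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
results = registry.call_many([
    ("crop", {"image": img, "x0": 1, "x1": 3, "y0": 0, "y1": 2}),
    ("mean_intensity", {"image": img}),
    ("missing_tool", {}),
])
for r in results:
    print(r)
```

Returning a `ToolResult` rather than raising means a calling model can see that a tool failed and try another one, which gives a learning policy something to react to.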

The real breakthrough, however, lies in OpenThinkIMG’s approach to reinforcement learning for complex image interpretation. Traditional supervised fine-tuning (SFT) methods rely on static, pre-recorded tool-use trajectories, which limit the model’s adaptability and generalization. OpenThinkIMG’s V-TOOLRL framework enables LVLMs to learn optimal tool-use strategies through trial and error, receiving feedback based on task success and efficiency. This means that, like a human analyst, the AI can experiment with different tool combinations, learn from its mistakes, and discover new ways to solve visual reasoning challenges. The result is a system that performs better on known tasks and adapts to new, unseen scenarios with minimal retraining.
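The trial-and-error idea can be illustrated with a deliberately tiny example. The sketch below is not V-TOOLRL itself; it swaps in plain REINFORCE with a running-average baseline on a three-option toy problem, where each option stands for a fixed tool sequence. The reward mirrors the paragraph above in spirit, paying for task success and charging a small cost per tool call. The strategy names, success probabilities, and `CALL_COST` are made-up assumptions.

```python
import math
import random
from typing import Dict, List, Tuple

# Toy problem; every number here is an illustrative assumption, not an OpenThinkIMG result.
# Each strategy stands for a fixed tool sequence: (success probability, number of tool calls).
STRATEGIES: Dict[str, Tuple[float, int]] = {
    "answer_directly":   (0.30, 0),
    "crop_then_read":    (0.90, 2),
    "segment_crop_read": (0.95, 5),
}
CALL_COST = 0.1  # efficiency penalty charged per tool call

def reward(success: bool, n_calls: int) -> float:
    # Success earns 1.0; each tool call costs CALL_COST.
    return (1.0 if success else 0.0) - CALL_COST * n_calls

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(episodes: int = 3000, lr: float = 0.1, seed: int = 0) -> Dict[str, float]:
    """REINFORCE with a running-average baseline over a softmax policy."""
    rng = random.Random(seed)
    names = list(STRATEGIES)
    logits = [0.0] * len(names)
    baseline = 0.0
    for _ in range(episodes):
        probs = softmax(logits)
        a = rng.choices(range(len(names)), weights=probs)[0]  # trial: sample a strategy
        p_success, n_calls = STRATEGIES[names[a]]
        r = reward(rng.random() < p_success, n_calls)         # feedback from the task
        advantage = r - baseline
        # Gradient of log softmax w.r.t. logit i is 1[i == a] - probs[i].
        for i in range(len(names)):
            logits[i] += lr * advantage * ((1.0 if i == a else 0.0) - probs[i])
        baseline += 0.05 * (r - baseline)  # slowly track the average reward
    return dict(zip(names, softmax(logits)))

policy = train()
for name, prob in sorted(policy.items(), key=lambda kv: -kv[1]):
    print(f"{name:18s} {prob:.3f}")
```

Over training, probability mass should shift toward `crop_then_read`, whose expected reward (0.9 - 0.2 = 0.7) beats both the cheap but unreliable direct answer (0.3) and the reliable but expensive five-call pipeline (0.95 - 0.5 = 0.45). That trade-off between accuracy and tool cost is hard to express in a fixed set of demonstration trajectories, which is the limitation of SFT the paragraph above describes.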

Real-World Applications: From Medical Imaging to Financial Chart Analysis

OpenThinkIMG’s approach to interactive visual reasoning with reinforcement learning is relevant to a range of industries. In healthcare, the framework could enable AI systems to analyze complex medical scans, highlight regions of interest, and extract quantitative data with more precision and adaptability than passive models offer. For example, a radiologist could use an OpenThinkIMG-based system to help identify and annotate tumours, measure their growth over time, and draft detailed reports, while the model learns to optimize its tool use for each specific case.

In finance, OpenThinkIMG excels at extracting and interpreting data from intricate charts and graphs, such as those found in earnings reports or market analyses. By combining its suite of visual tools with reinforcement learning, the framework can accurately read values, detect trends, and flag anomalies, giving analysts actionable insights in real time. The same principles apply to industrial inspection, where OpenThinkIMG can monitor complex machinery, identify potential faults, and suggest maintenance actions through dynamic, tool-augmented visual reasoning.

OpenThinkIMG’s Open-Source Ecosystem: Accelerating Research and Innovation

One of the most compelling aspects of OpenThinkIMG is its commitment to open-source development. By making its codebase, pre-trained models, and tool libraries freely available, the framework invites researchers, developers, and organizations to contribute, customize, and extend its capabilities. The OpenThinkIMG GitHub repository provides comprehensive documentation, training scripts for supervised and reinforcement learning, and a growing collection of community-contributed tools. This collaborative approach accelerates the pace of innovation and ensures that the technology remains accessible to a wide range of users, from academic researchers to industry practitioners.

For those looking to get started, the repository includes detailed instructions for setting up the vision tool infrastructure, training models with V-TOOLRL, and deploying the system in real-world applications. The open-source nature of OpenThinkIMG also means that users can develop and share new visual tools, benchmark their performance on diverse datasets, and contribute to the evolution of tool-augmented visual reasoning in AI.

SEO Strategies: Leveraging Long-Tail Keywords for Maximum Reach

To ensure that content about OpenThinkIMG reaches its intended audience (AI researchers, industry professionals, and curious learners), it is essential to build an SEO strategy around long-tail keywords for complex image interpretation using reinforcement learning and visual tools. Long-tail keywords, such as “best open-source framework for tool-augmented image analysis,” “reinforcement learning for visual tool use in LVLMs,” and “interactive visual reasoning for medical imaging AI,” may have lower individual search volumes, but they are highly specific and closely aligned with user intent. According to SEO experts and industry research, these keywords drive higher conversion rates, attract targeted traffic, and improve the likelihood of ranking for niche queries.

When crafting content, work these long-tail keywords naturally into headings, introductions, and body text. This boosts search engine visibility and also ensures that the article answers its readers’ precise questions. For example, a section titled “How reinforcement learning enhances visual tool use for complex image interpretation in open-source AI frameworks” is more likely to attract and satisfy users searching for advanced, actionable information. Organizing content into topic clusters and linking related articles can further improve SEO performance and user engagement.

Performance Benchmarks: Outperforming Larger Models with Efficient Tool Use

OpenThinkIMG’s approach to reinforcement learning for visual tool selection in LVLMs has yielded impressive results on several benchmarks. In recent evaluations, a 2-billion-parameter model trained with V-TOOLRL outperformed much larger models, including GPT-4.1, on challenging chart reasoning tasks and complex visual question answering. This suggests that the quality of tool use and adaptive reasoning can outweigh sheer model size, especially on tasks that require deep, multi-step analysis.

The framework’s ability to generalize learned tool-use strategies to new domains is particularly noteworthy. By training on synthetic trajectories generated by advanced language models like GPT-4o, OpenThinkIMG can quickly adapt to novel tasks, reducing the need for extensive manual annotation or retraining. This adaptability makes it an ideal solution for organizations seeking to deploy AI systems in dynamic, real-world environments where requirements and data types may change over time.
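As a concrete picture of what a “synthetic trajectory” might contain, the sketch below stores a question, a sequence of tool calls with their observations, and a final answer, then flattens the whole thing into chat-style messages for supervised fine-tuning. The field names, the `tool` message role, the `<image:...>` placeholder, and the `zoom_in` tool are hypothetical; OpenThinkIMG’s actual data format may differ.

```python
import json
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Step:
    tool: str                   # hypothetical tool name
    args: Dict[str, object]     # arguments the generating model chose
    observation: str            # what the tool returned

@dataclass
class Trajectory:
    image_id: str
    question: str
    steps: List[Step]
    answer: str

def to_messages(traj: Trajectory) -> List[Dict[str, str]]:
    """Flatten a trajectory into alternating assistant/tool turns for supervised fine-tuning."""
    messages = [{"role": "user", "content": f"<image:{traj.image_id}> {traj.question}"}]
    for step in traj.steps:
        # The model is trained to emit the tool call; the observation is provided as context.
        call = json.dumps({"tool": step.tool, "args": step.args})
        messages.append({"role": "assistant", "content": call})
        messages.append({"role": "tool", "content": step.observation})
    messages.append({"role": "assistant", "content": traj.answer})
    return messages

traj = Trajectory(
    image_id="chart_001",
    question="What is the peak value?",
    steps=[Step("zoom_in", {"box": [10, 20, 110, 80]}, "peak label reads 73")],
    answer="73",
)
for m in to_messages(traj):
    print(m)
```

Because every tool call and observation is stored explicitly, the same records can serve as a supervised warm start before reinforcement learning takes over.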

Ethical Considerations and Future Directions in Tool-Augmented Visual Reasoning

As with any powerful AI technology, deploying OpenThinkIMG raises important ethical questions. Using visual tools responsibly, training models on diverse and representative datasets, and keeping outputs transparent and explainable are essential to building trust and avoiding unintended consequences. The open-source community plays a vital role in addressing these challenges by fostering a culture of transparency, accountability, and continuous improvement.

The future of tool-augmented visual reasoning with reinforcement learning is bright. Researchers are already exploring extensions of OpenThinkIMG to video analysis, 3D scene understanding, and multimodal interaction, where AI agents can simultaneously reason across images, text, and other data types. As the framework evolves, its modular architecture and open-source ethos will continue to drive innovation, enabling new applications and breakthroughs in fields as diverse as autonomous vehicles, scientific research, and creative arts.

Getting Started with OpenThinkIMG: Resources and Community Support

For those eager to explore or contribute to OpenThinkIMG, a wealth of resources is available:

- Official Paper: OpenThinkIMG: Learning to Think with Images via Visual Tool Reinforcement Learning (PapersWithCode)

- GitHub Repository: OpenThinkIMG on GitHub

- Video Overview: OpenThinkIMG: LVLMs Think With Images – AI Research Roundup

The open-source community is active and growing, with regular updates, new tool releases, and collaborative research projects. Whether you are an AI developer seeking to implement reinforcement learning for interactive image interpretation, a researcher interested in benchmarking new visual tools, or an industry leader looking to deploy advanced LVLMs in production, OpenThinkIMG offers a powerful, flexible, and future-proof solution.

Conclusion: OpenThinkIMG as the Future of Adaptive Image Understanding

In summary, OpenThinkIMG stands at the cutting edge of AI-driven image interpretation. By combining modular visual tools with reinforcement learning, it enables LVLMs to “think with images” in ways that resemble how a human analyst works through an image step by step. Its open-source framework, strong performance, and adaptability make it a valuable resource for anyone seeking to push the boundaries of what artificial intelligence can achieve in complex visual domains. By applying long-tail keywords and SEO best practices, content creators can help OpenThinkIMG reach the audiences who can use it to drive innovation, accelerate research, and shape the future of intelligent visual reasoning.

Frequently Asked Questions about OpenThinkIMG

What is OpenThinkIMG?

OpenThinkIMG is an open-source framework that combines visual tools and reinforcement learning to enable Large Vision-Language Models (LVLMs) to perform complex image interpretation through interactive, tool-augmented reasoning.

How does OpenThinkIMG use reinforcement learning?

OpenThinkIMG employs a reinforcement learning framework called V-TOOLRL, allowing AI models to learn optimal strategies for using visual tools through trial and error, improving performance on tasks that require iterative and adaptive reasoning.

What types of visual tools are included in OpenThinkIMG?

The framework includes over fifty modular visual tools such as region-of-interest analyzers, text extractors, chart data extractors, and semantic segmenters, all designed to support complex image analysis tasks.

In which industries can OpenThinkIMG be applied?

OpenThinkIMG is useful in healthcare (medical imaging), finance (chart and document analysis), industrial inspection, scientific research, and any domain requiring advanced, interactive